Read Project Arkham, Data centres are like Tribbles for Part 1 of this blog series.

There were two major areas to our DC consolidation project which needed to be tackled separately. Firstly, the Zettagrid cloud, our core business, runs 24x7. Secondly, the underlying network, with about 2500 last mile services (Fibre, NBN, 4G etc) which connect into Zettagrid, could not be interrupted.

Starting with the cloud, we identified that two of the Zettagrid zones needed to be moved to longer term locations. The first was in Sydney (Vocus Doody St to Equinix SY4) and the other in Perth (Vocus DC2 to NextDC P1).

From a cloud (compute, storage) perspective the prospect of turning VMs off and shifting tonnes of servers and SANs to a new data centre isn't going to go down very well with our customers. How would you like an email that says, "we are going to power down that critical app for 2 days whilst we move our equipment to a new data centre"? That kind of thing would make customers start looking for the exit.

In addition, the likelihood of successfully powering on a device that has been shipped across the city after a couple of years of operating in a DC drops with every speed hump or pothole the delivery truck drives over.

So given the 24x7 requirement from customers we really had only one option: duplication. We were able to justify spending a bunch of money to buy the whole base compute and storage environment in a new data centre and copy everything live. We made our Dell and Juniper reps pretty happy.

For those interested in what we run, it is primarily a Dell/Juniper environment. At the moment we have a mix of 3 tier (compute/storage/network) and HCI in the environment. Most zones are 3 tier based upon Dell blade chassis with M and also Dell R series, various types of Dell EMC SANs (typically Compellent) and Juniper routing and switching (our ISP heritage shines through here). HCI is primarily based upon the Dell FX2 platform.

This solution made the migration strategy so much simpler and we took a mostly big bang approach. Essentially we would leave behind the old site along with the legacy it involved.

So after spending seven figures on new equipment (it blows me away that this small Australian cloud provider can get to a size that we can do this) we got the new kit installed in our new data centres. Then we had the fun part of moving the environment live.

- We installed the new equipment, Dell R640s, Dell SCV7020s, Dell SC420s, Juniper QFX5110s and Juniper MX204s.

- We established 10G Layer 2 links between each of the source (DC1) and destination (DC2) data centres.

- Spanned VLANs between DC1 and DC2.

- Stretched iSCSI between the data centres.

- Joined ESXi hosts in new DC2 to DC1 VMware Cluster.

- vMotioned VMs and Storage from ESXi hosts in DC1 to DC2.

- This gave us sufficient capacity to evacuate existing hosts in DC1 and physically move them to DC2. Once storage was vMotioned we also moved the SANs.

- Repeated the previous step (evacuate hosts, then physically move them) about five times until everything was moved.

- Then clean up the routing and move it from DC1 to DC2.
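The evacuate-and-move loop in the steps above can be sketched in a few lines of Python. This is only a toy model to show the shape of the process, not the tooling we used: the `Host` class, the `evacuate_and_move` function, the host counts and the batch size are all made up for illustration, and it ignores real capacity limits (CPU, RAM, storage).

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    site: str                      # "DC1" (source) or "DC2" (destination)
    vms: list = field(default_factory=list)


def evacuate_and_move(hosts, batch_size, src="DC1", dst="DC2"):
    """Drain a batch of source hosts onto destination hosts, then relocate
    the emptied hosts. Repeat until nothing is left at the source.
    Returns the number of rounds needed. Hypothetical model only."""
    rounds = 0
    while any(h.site == src for h in hosts):
        batch = [h for h in hosts if h.site == src][:batch_size]
        targets = [h for h in hosts if h.site == dst]
        if not targets:
            raise RuntimeError("no destination hosts to migrate onto")
        for host in batch:
            # Stand-in for a live vMotion: place each VM on the
            # destination host currently running the fewest VMs.
            while host.vms:
                vm = host.vms.pop()
                min(targets, key=lambda t: len(t.vms)).vms.append(vm)
            # The empty host is now powered off, trucked over and rejoined,
            # so it counts as destination capacity from the next round on.
            host.site = dst
        rounds += 1
    return rounds


# Assumed example: 2 new hosts already in DC2, 10 old hosts in DC1.
hosts = [Host(f"new{i}", "DC2") for i in range(2)]
hosts += [Host(f"old{i}", "DC1", [f"vm{i}-{j}" for j in range(3)]) for i in range(10)]
print(evacuate_and_move(hosts, batch_size=2))
```

The point of the model is that every batch of old hosts that arrives in DC2 becomes landing space for the next batch, which is why a fairly small amount of new kit is enough to get the loop started.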

The whole process took about a month for each zone.

The process was relatively transparent for customers. We did run into some major difficulties with some of the new hardware. We'd been running Dell R640s in production for some time with no troubles using the QLogic 57800 2x10Gb DA/SFP+ NICs. You can see what is going to happen...

When the first dozen new Dell R640s arrived they contained a newer Broadcom BCM57412 NIC. This was an untested NIC in our environment but was on the VMware HCL. We had so much trouble with the shipped Broadcom firmware crashing NICs and also crashing hosts that we opted to nuke the problem and replace every single Broadcom BCM57412 with the previous standard QLogic 57800 2x10Gb DA/SFP+ NIC. This firmware bug caused weeks of drama and delayed parts of the project by at least 4 weeks. Lots of finger pointing between vendors and copious volumes of logs shipped. The lesson is that you need to check every single component when ordering!

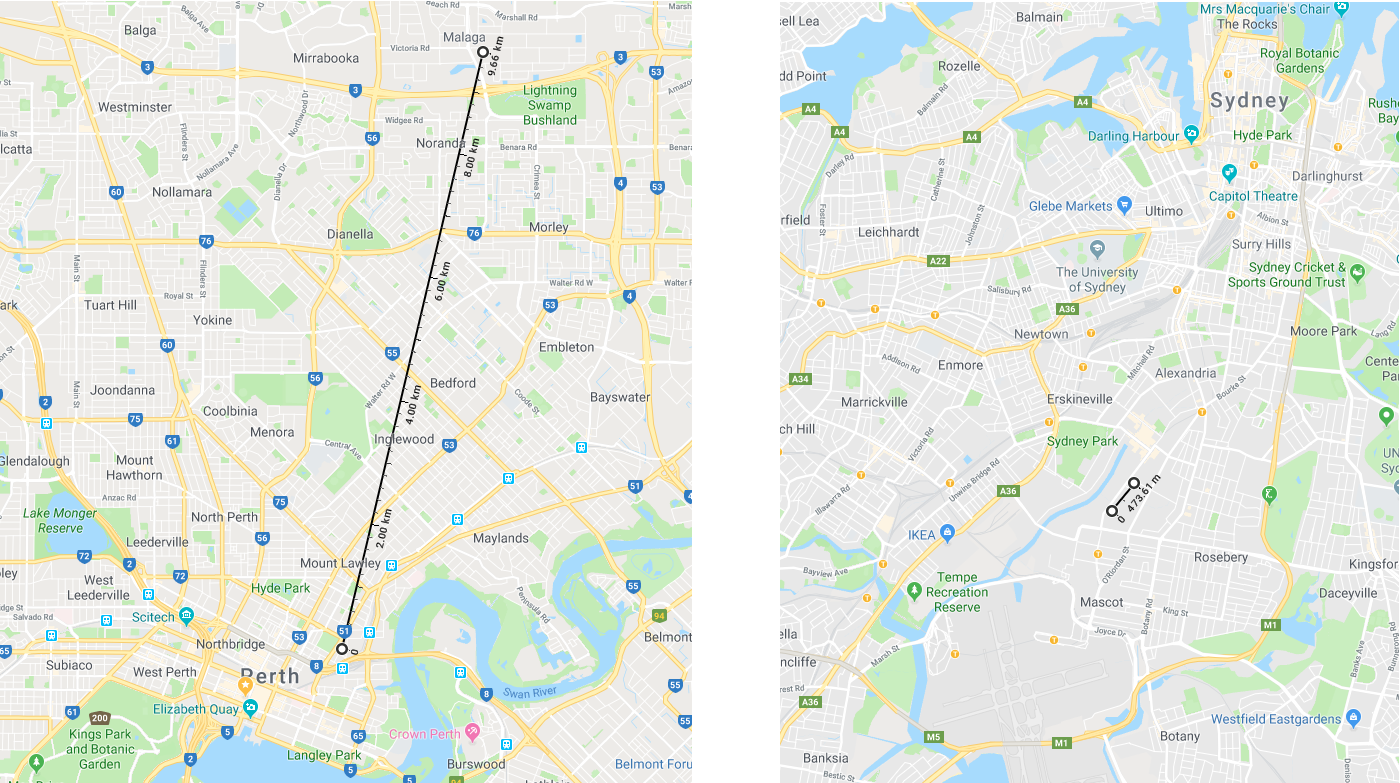

We live vMotioned about 4000 VMs between data centres, over distances ranging from 473m to 9.6km. Apart from some increased latency due to all of the disk activity (sub 10ms) there were no issues with this at all. I still think it is amazing to be able to move a VM live without turning it off.
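For a rough sense of why the distance itself was not a problem, here is a back-of-the-envelope calculation of the propagation delay over those links. It assumes light travels through fibre at roughly 2 x 10^8 m/s (about two thirds of its speed in a vacuum) and treats the quoted distances as the fibre path length, which may understate the real cable run. It also ignores switching and serialisation delay.

```python
# Approximate signal speed in optical fibre (assumption: ~2/3 of c).
SPEED_IN_FIBRE_M_PER_S = 2.0e8


def round_trip_us(distance_m):
    """Round-trip propagation delay in microseconds for a fibre run."""
    return 2 * distance_m / SPEED_IN_FIBRE_M_PER_S * 1e6


for distance_m in (473, 9_600):
    print(f"{distance_m} m: {round_trip_us(distance_m):.2f} us round trip")
```

Under those assumptions the round trip works out to under 5 microseconds for the short hop and just under 100 microseconds for the long one, which is tiny next to the sub 10ms disk latency we actually saw.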

So far this part of the project hadn't actually got us to the position of using any fewer data centres. It proved we could spend money and move thousands of VMs live (which is still really cool) but there was still more work to do.

TBC in Project Arkham, Part 3 - Do I cut the red cable or the blue cable?