I recommend you read Project Arkham Pt2 - Yes, Virtualisation is still amazing before reading this blog post.

Automation for Migration

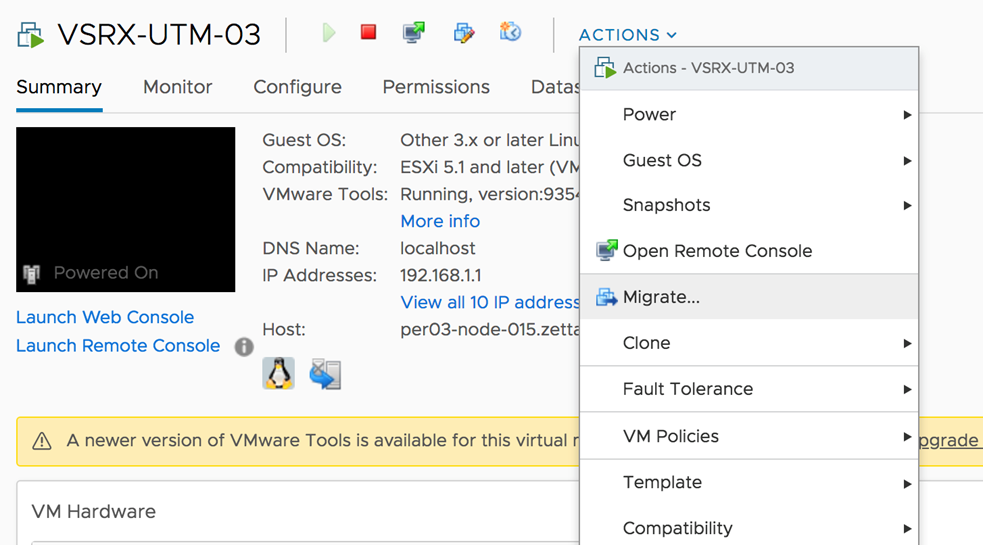

One thing missing from Part 2 was specific detail on how we performed the migration of VMs. In most cases you could use the UI to perform the migration, but that needs a human driving each move, so in practice it only works during business hours. You wouldn't want to do this 3500 times.

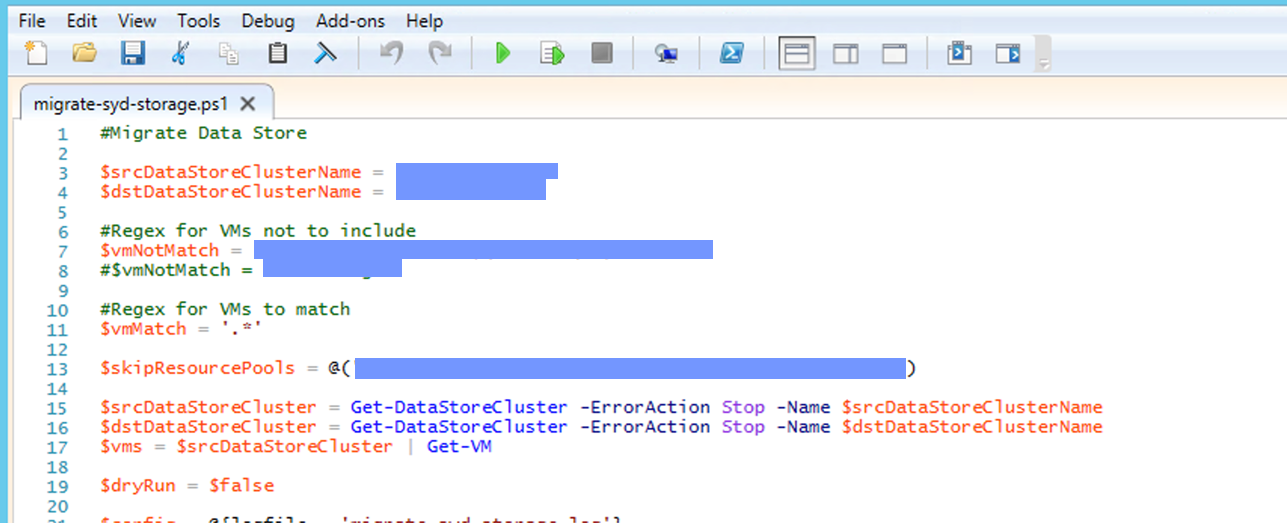

We went old school and used PowerShell to vMotion selected VMs in groups. This way we had complete control of the volume and timing of the vMotions.
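The actual scripts aren't reproduced here, so the following is only a rough sketch of the "groups plus timing" idea, written in Python rather than PowerShell so the batching logic is easy to run on its own. Everything in it is an assumption for illustration: the `migrate` callable is a hypothetical placeholder for whatever call kicks off a vMotion, and the group size and after-hours window are made-up parameters.

```python
from datetime import datetime, time
from typing import Callable, Iterator, List


def in_window(now: datetime, start: time, end: time) -> bool:
    """Return True if `now` is inside the window; the window may wrap past midnight."""
    t = now.time()
    if start <= end:
        return start <= t < end
    return t >= start or t < end


def batches(items: List[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive groups of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for i in range(0, len(items), size):
        yield items[i:i + size]


def migrate_in_groups(
    vms: List[str],
    size: int,
    migrate: Callable[[str], None],  # hypothetical: starts one VM migration
    start: time,
    end: time,
    clock: Callable[[], datetime] = datetime.now,
) -> List[str]:
    """Migrate VMs one group at a time and stop once the window has closed.

    The window is checked before each group, so a group that has started
    is always finished. Returns the VMs that were not attempted.
    """
    remaining = list(vms)
    for group in batches(list(vms), size):
        if not in_window(clock(), start, end):
            break
        for vm in group:
            migrate(vm)
        remaining = remaining[len(group):]
    return remaining
```

Calling `migrate_in_groups(names, 10, start_vmotion, time(22, 0), time(5, 0))` with a real `start_vmotion` function would work through the list ten VMs at a time between 22:00 and 05:00 and hand back whatever is left for the next night. Checking the window per group rather than per VM is a deliberate choice in this sketch: it keeps each group intact, at the cost of possibly running slightly past the end of the window.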

Onto the Cleanup

The remaining part of the Arkham project was to clean up the network and close some data centres. This was always known to be the most difficult part of the project. When you have to deal with the physical world of racks, cross connects, telco aggregation ports and dark fibres, there is just as much complexity as in the virtual world, but most of it is around coordinating third parties, logistics and contracts.

While the cloud has transformed billing models and (to a large extent) jettisoned the concept of long contracts, the data centre and telco world still revolves around long-term commit contracts so that providers can guarantee a return on their investment.

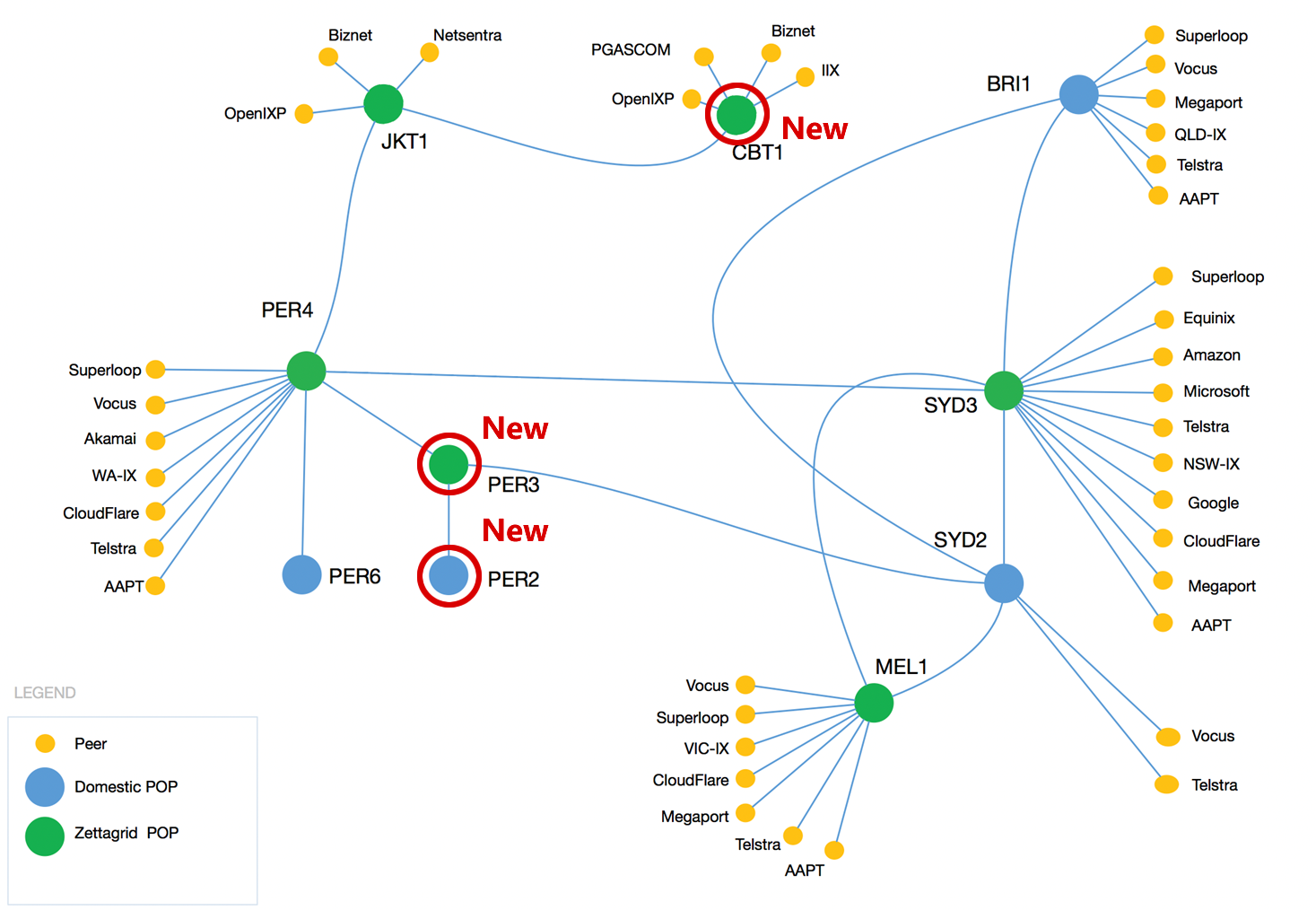

So after the successful moves of 3500 VMs in Sydney and Perth, we were left with orphaned points of presence (PoPs). To actually realise the savings for the project, these needed to be decommissioned.

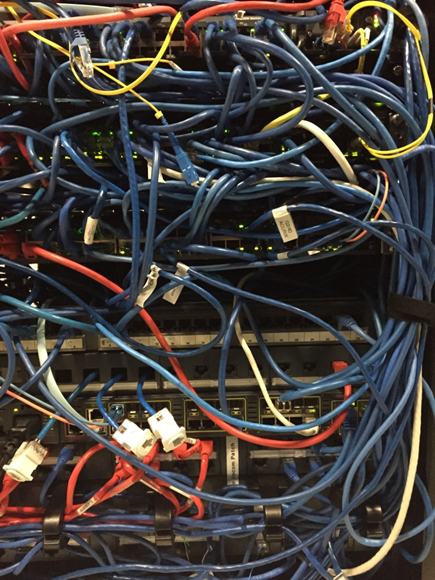

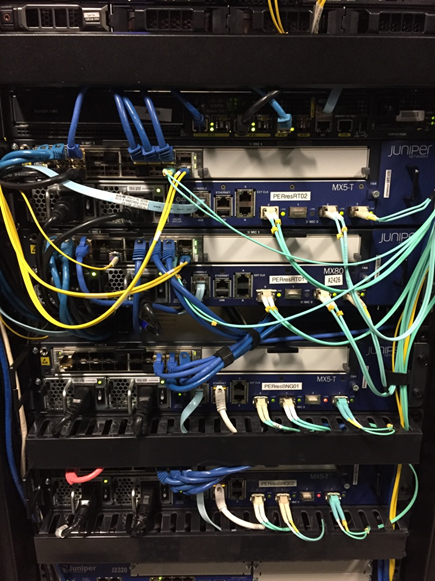

In Perth, we were closing two of our oldest data centre PoPs. We have been located in Vocus (formerly Acure DC1) since 2004, which meant a LOT of history (aka MESS). Here are some examples:

And Vocus DC2 was our first ever Zettagrid zone. We had replaced the original equipment long long ago but we still had lots of cross connects and existing customer co-location.

So the task commenced to create new aggregation (AG) ports in Equinix and NextDC for AAPT, Telstra Wholesale and Vocus. It took about 4 months to arrange for services (Customer Links) to be migrated. Primarily it came down to finding a suitable time for everyone to be comfortable losing a few packets during the cut-over.

A New Chapter Begins

We've finally got to the end of the migration project. We still have a few lingering services in some of our old data centres which cannot be closed. We closed at least a dozen racks and some dark fibre networks. However...

We've just bought Silverain and as such we've just extended our footprint by two new data centres and a bunch of racks. This has now been included in the project scope. Given our appetite for consolidating other cloud providers it seems the Arkham project will probably have a perpetual life within Zettagrid. We've also opened a second zone in Jakarta and so our position now is a net increase in data centre PoPs. ¯\_(ツ)_/¯

Some Lessons Learnt

The short version:

- Automation works
  - Network built out with Ansible
  - Virtualisation infrastructure with Salt
  - Migrations with PowerShell
- Vendor and distributor delays can add weeks
- Staff burnout
  - Excess load/stress put on all while dealing with project issues and still doing BAU
  - Too much after hours work